Export last hour CSV object log file to external or local FTP server from LM

Example: export last hour CSV object log file to external or internal FTP server from LogicMachine

Task

Once an hour, make CSV file with all objects logs and send to external FTP server with IP 192.168.1.11, login ‘ftplogin‘, password ‘ftppassword‘. In case you want to download the file manually from LM local FTP server, it is available in directory /home/ftp

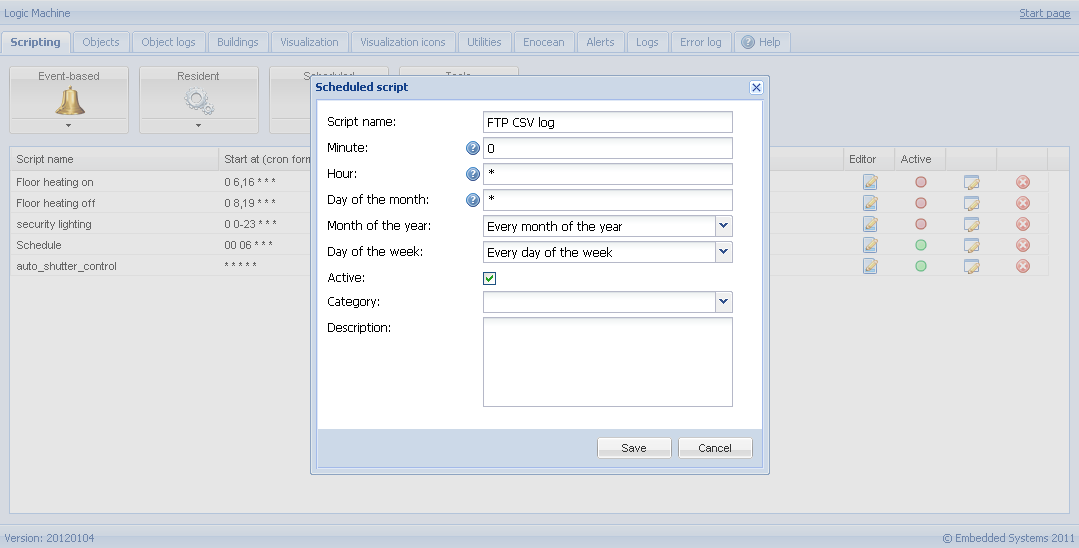

Scheduled program

In Scripting -> Scheduled add the script which will run once an hour and add following code in Script editor.

- require('socket.ftp')

-

- -- ftp file

- ftpfile = string.format('ftp://ftplogin:ftppassword@192.168.1.11/%s.csv', os.date('%Y-%m-%d_%H-%M'))

- -- get past hour data (3600 seconds)

- logtime = os.time() - 60 * 60

-

- -- list of objects by id

- objects = {}

-

- -- objects with logging enabled

- query = 'SELECT address, datatype, name FROM objects WHERE disablelog=0'

- for _, object in ipairs(db:getall(query)) do

- objects[ tonumber(object.address) ] = {

- datatype = tonumber(object.datatype),

- name = tostring(object.name or ''),

- }

- end

-

- -- csv buffer

- buffer = { '"date","address","name","value"' }

-

- -- get object logs

- query = 'SELECT src, address, datahex, logtime, eventtype FROM objectlog WHERE logtime >= ? ORDER BY id DESC'

- for _, row in ipairs(db:getall(query, logtime)) do

- object = objects[ tonumber(row.address) ]

-

- -- found matching object and event type is group write

- if object and row.eventtype == 'write' then

- datatype = object.datatype

-

- -- check that object datatype is set

- if datatype then

- -- decode data

- data = knxdatatype.decode(row.datahex, datatype)

-

- -- remove null chars from char/string datatype

- if datatype == dt.char or datatype == dt.string then

- data = data:gsub('%z+', '')

- -- date to DD.MM.YYYY

- elseif datatype == dt.date then

- data = string.format('%.2d.%.2d.%.2d', data.day, data.month, data.year)

- -- time to HH:MM:SS

- elseif datatype == dt.time then

- data = string.format('%.2d:%.2d:%.2d', data.hour, data.minute, data.second)

- end

- else

- data = ''

- end

-

- -- format csv row

- logdate = os.date('%Y.%m.%d %H:%M:%S', row.logtime)

- csv = string.format('%q,%q,%q,%q', logdate, knxlib.decodega(row.address), object.name, tostring(data))

-

- -- add to buffer

- table.insert(buffer, csv)

- end

- end

-

- -- upload to ftp only when there's data in buffer

- if #buffer > 1 then

- result, err = socket.ftp.put(ftpfile, table.concat(buffer, '\r\n'))

- end

-

- -- error while uploading

- if err then

- alert('FTP upload error: %s', tostring(err))

- end

Write to existing file on FTP

Here is example if you want to write to existing file log.csv which is located on FTP 192.168.1.40

- require('socket.ftp')

-

- logtime = os.time() - 60 * 60

- objects = {}

-

- query = 'SELECT address, datatype, name FROM objects WHERE disablelog=0'

- for _, object in ipairs(db:getall(query)) do

- objects[ tonumber(object.address) ] = {

- datatype = tonumber(object.datatype),

- name = tostring(object.name or ''),

- }

- end

-

- buffer = {}

-

- query = 'SELECT src, address, datahex, logtime, eventtype FROM objectlog WHERE logtime >= ? ORDER BY id DESC'

- for _, row in ipairs(db:getall(query, logtime)) do

- object = objects[ tonumber(row.address) ]

-

- if object and row.eventtype == 'write' then

- datatype = object.datatype

-

- if datatype then

- data = knxdatatype.decode(row.datahex, datatype)

-

- if datatype == dt.char or datatype == dt.string then

- data = data:gsub('%z+', '')

- elseif datatype == dt.date then

- data = string.format('%.2d.%.2d.%.2d', data.day, data.month, data.year)

- elseif datatype == dt.time then

- data = string.format('%.2d:%.2d:%.2d', data.hour, data.minute, data.second)

- end

- else

- data = ''

- end

-

- logdate = os.date('%Y.%m.%d %H:%M:%S', row.logtime)

- csv = string.format('%q,%q,%q,%q', logdate, knxlib.decodega(row.address), object.name, tostring(data))

- table.insert(buffer, csv)

- end

- end

-

- if #buffer > 1 then

- data = table.concat(buffer, '\r\n')

- res, err = socket.ftp.put({

- host = '192.168.1.40',

- user = 'ftp',

- password = 'ftp',

- command = 'appe',

- argument = 'log.csv',

- source = ltn12.source.string(data)

- })

- end

-

- if err then

- alert('FTP upload error: %s', tostring(err))

- end

Write only specific object logs instead of full list

Create a scheduled script which runs each 10 minutes and fill the table with required object values:

- data = storage.get('myobjectlogs', {})

-

- table.insert(data, {

- ['time'] = os.time(),

- ['counter-1'] = grp.getvalue('1/1/1'),

- ['counter-2'] = grp.getvalue('1/1/2'),

- })

-

- storage.set('myobjectlogs', data)

Local FTP CSV storage

From the upper script do the following changes:

1. Replace this:

- require('socket.ftp')

-

- -- ftp file

- ftpfile = string.format('ftp://ftplogin:ftppassword@192.168.1.11/%s.csv', os.date('%Y-%m-%d_%H-%M'))

With this:

- ftpfile = string.format('/home/ftp/%s.csv', os.date('%Y-%m-%d_%H-%M'))

2. Replace this:

- -- upload to ftp only when there's data in buffer

- if #buffer > 1 then

- result, err = socket.ftp.put(ftpfile, table.concat(buffer, '\r\n'))

- end

-

- -- error while uploading

- if err then

- alert('FTP upload error: %s', tostring(err))

- end

With this:

- if #buffer > 1 then

- data = table.concat(buffer, '\r\n')

- io.writefile(ftpfile, data)

- end

3.Create a scheduled script that runs once a day to remove old logs (60 is a number of days to keep old logs):

- function cleanup(dir, days)

- local now, files, mtime, delta

-

- now = os.time()

- files = io.ls(dir) or {}

-

- for _, file in ipairs(files) do

- file = dir .. '/' .. file

- _, mtime = io.stat(file)

-

- if mtime then

- delta = (now - mtime) / 86400

- if delta > days then

- os.remove(file)

- end

- end

- end

- end

-

- cleanup('/home/ftp', 60)